How to leverage Multi-Stage Caches with Laravel and HAProxy

At Streamfinity, we serve millions of requests with rapid response times using several stages of caches.

Roman Zipp, August 8th, 2024

This article explains in detail how we handle 50+ million requests per month at Streamfinity with response caching across multiple layers.

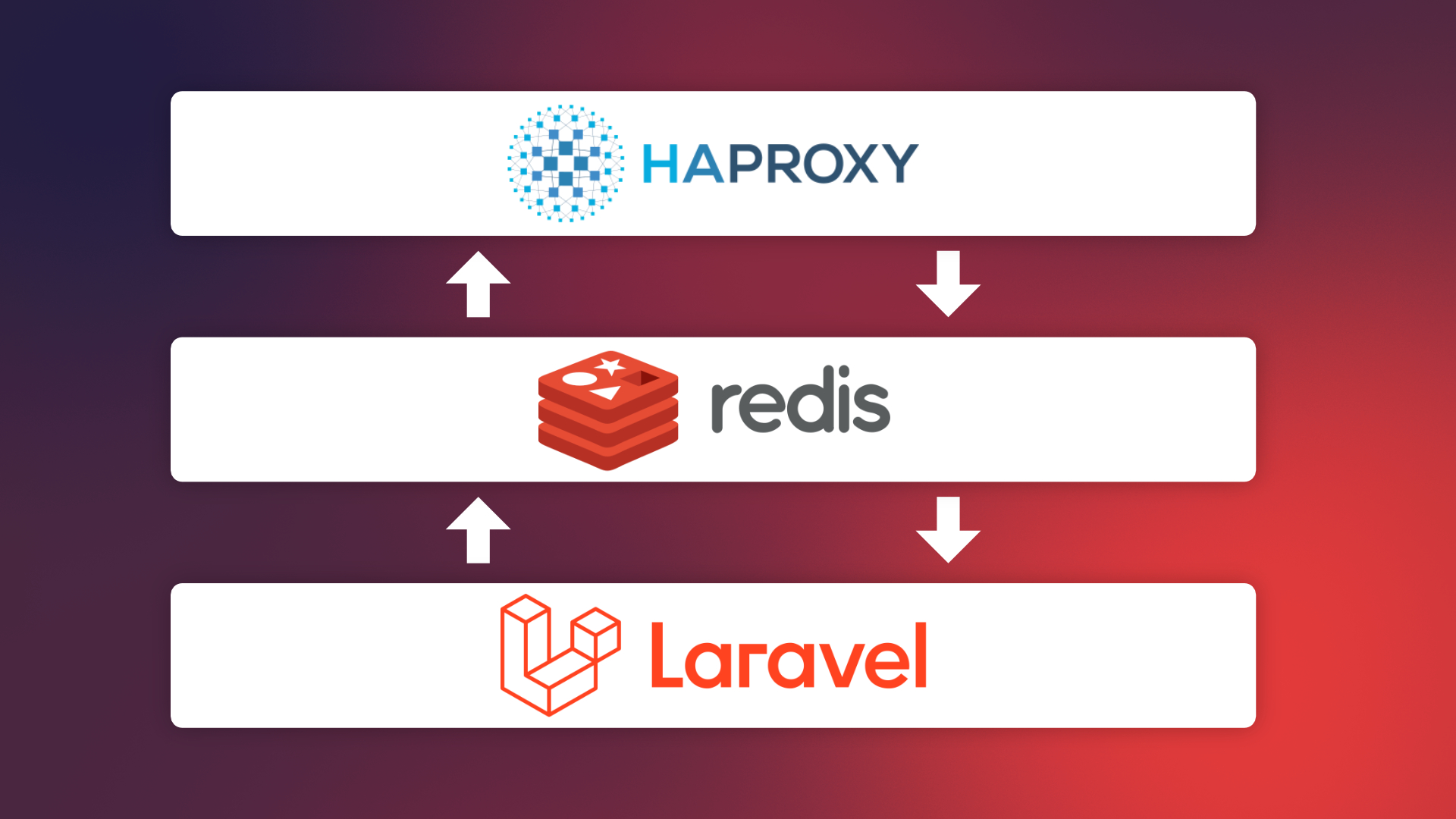

Let's start with this absolutely non-scientific flow diagram.

Our goal is to reduce load on our backend servers and hit the database as little as possible. We achieve this with multiple stages, or layers, of cache at different points.

When NOT to cache

There are some caveats to caching responses and data. First, we want to make sure that we only return cached responses for the right requests. In particular, responses on authenticated routes must not be cached by URI, because the response content differs for every user sending a Bearer token.
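The rule about authenticated routes can be sketched as a small predicate. This is a hedged illustration in Python, not Streamfinity's actual Laravel code; the function name `may_mark_cacheable` and the restriction to GET and HEAD requests are assumptions made for the example.

```python
def may_mark_cacheable(method: str, request_headers: dict) -> bool:
    """Return True if a response to this request may be shared between users."""
    # Header names are case-insensitive, so normalise them before the lookup.
    names = {name.lower() for name in request_headers}
    if "authorization" in names:
        # A Bearer token means the response is user-specific: never share it.
        return False
    # Assumption: only safe, read-only methods are considered for caching.
    return method.upper() in {"GET", "HEAD"}
```

A backend could use a check like this to decide whether a response is allowed to opt in to shared caching at all.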

An ideal scenario for response caching is a static endpoint which could be replaced by a simple JSON file. An example at Streamfinity is the endpoint which returns all available stores for our browser extension, such as Chrome Web Store, Firefox AMO, etc. The client sends a request with a simplified User-Agent as a GET parameter. The endpoint then returns all available stores, with the first compatible store featuring a recommended key. This endpoint is unauthenticated and is therefore an ideal candidate for response caching.
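To make the shape of such an endpoint concrete, here is a minimal sketch of its logic in Python. The store catalogue, the `browser` field, the URLs, and the function name `list_stores` are all hypothetical placeholders; the real endpoint is a Laravel route whose implementation is not shown in this article.

```python
# Hypothetical store catalogue; the fields and URLs are placeholders.
STORES = [
    {"browser": "chrome", "name": "Chrome Web Store", "url": "https://example.com/chrome"},
    {"browser": "firefox", "name": "Firefox AMO", "url": "https://example.com/firefox"},
]


def list_stores(user_agent: str) -> list:
    """Return every store, flagging the first compatible one as recommended."""
    result = []
    recommended_set = False
    for store in STORES:
        entry = dict(store)  # copy so the shared catalogue is never mutated
        if not recommended_set and store["browser"] == user_agent.lower():
            entry["recommended"] = True
            recommended_set = True
        result.append(entry)
    return result
```

Because the output depends only on the GET parameter, two requests with the same URL always produce the same body, which is the property that makes this kind of endpoint safe to cache.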

Stage 1: Cloudflare

Static assets like images, JS and CSS files are cached by Cloudflare by default. Every new build of our application generates frontend assets with a unique hash, so clients always receive the latest version regardless of what Cloudflare has cached.
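The idea behind hashed assets can be illustrated with a short sketch. The article does not say how the hash is derived, so this example assumes a content hash embedded in the file name; the function name `hashed_asset_name` and the 8-character digest length are illustrative choices.

```python
import hashlib
from pathlib import PurePosixPath


def hashed_asset_name(filename: str, content: bytes) -> str:
    """Insert a short content hash before the file extension."""
    digest = hashlib.sha256(content).hexdigest()[:8]
    path = PurePosixPath(filename)
    return f"{path.stem}.{digest}{path.suffix}"
```

When the file content changes, the name changes with it, so a stale cached copy is never requested under the new URL.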

Stage 2: HAProxy

First, some basic goals:

We want to cache server responses in HAProxy

We only want to cache the response if an X-Proxy-Cache header has been sent from the server

HAProxy will only cache the data if all of the following are true:

The size of the resource does not exceed max-object-size

The response from the server is 200 OK

The response does not have a Vary header

The response does not have a Cache-Control: no-cache header
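The four conditions listed above can be modelled as a simple predicate. This is only a sketch of the rules as stated in this article, not HAProxy's real implementation, which applies further checks. The function name `haproxy_would_store` is hypothetical, and the default of 100000 bytes mirrors the max-object-size value used in this article's cache section.

```python
def haproxy_would_store(status: int, headers: dict, body_size: int,
                        max_object_size: int = 100_000) -> bool:
    """Model of the four conditions listed above; not HAProxy's real code."""
    # Compare header names and values case-insensitively.
    lowered = {name.lower(): value.lower() for name, value in headers.items()}
    if body_size > max_object_size:
        return False  # resource is larger than max-object-size
    if status != 200:
        return False  # only 200 OK responses are stored
    if "vary" in lowered:
        return False  # responses with a Vary header are skipped
    if "no-cache" in lowered.get("cache-control", ""):
        return False  # the backend explicitly forbids caching
    return True
```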

Cache Section

The cache section defines the cache store to use. This is the place to configure object sizes and cache durations. The max-age set here acts as an upper bound: a smaller max-age or s-maxage in the response's Cache-Control header shortens the lifetime of that object.

cache bucket
    total-max-size 512      # MB
    max-object-size 100000  # bytes
    max-age 120             # seconds

Backend

Let's look at the following HAProxy backend we use at Streamfinity and dissect every line.

backend http_back_streamfinity_production_backend

http-request cache-use bucket

http-response cache-store bucket if { res.hdr(x-proxy-cache) true }

http-response del-header Cache-Control if { res.hdr(x-proxy-cache) true }

server-template streamfinity-production-backend_ 1-8 _streamfinity-production-backend._tcp.service.consul resolvers consul resolve-opts allow-dup-ip resolve-prefer ipv4 check

Cache Store

http-request cache-use bucket

The cache-use statement instructs HAProxy to look up incoming requests in the cache store named bucket and to serve a stored response when one is available.

Check for Headers

http-response cache-store bucket if { res.hdr(x-proxy-cache) true }

http-response del-header Cache-Control if { res.hdr(x-proxy-cache) true }

If HAProxy finds the header X-Proxy-Cache in the server response, we want to store the response (cache-store) in the cache bucket.

The original Cache-Control header with the duration should be removed, so we only cache the data on the HAProxy and don't instruct clients to keep the data locally.
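The effect of these two rules on a single backend response can be sketched as follows. This is a simplified model for illustration only; the function name `apply_backend_rules` is hypothetical, and the sketch ignores the additional eligibility checks HAProxy performs before it actually stores anything.

```python
def apply_backend_rules(response_headers: dict) -> tuple:
    """Return (store_in_cache, headers_sent_to_client)."""
    # Header names match case-insensitively; the ACL compares the value
    # to the literal string "true".
    opted_in = any(
        name.lower() == "x-proxy-cache" and value == "true"
        for name, value in response_headers.items()
    )
    if not opted_in:
        # No opt-in: nothing is stored and the headers pass through untouched.
        return False, dict(response_headers)
    # Opted in: store the response and hide Cache-Control from the client.
    client_headers = {
        name: value
        for name, value in response_headers.items()
        if name.lower() != "cache-control"
    }
    return True, client_headers
```

The backend stays in control: a response is only stored when it explicitly opts in, and the client never sees the duration meant for the proxy.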

Add Cache-Status Header

You can also add a header which indicates whether a given response originates from the HAProxy cache or was served by the backend server. These two lines check whether the srv_id fetch method returns the ID of a server that was used to handle the request. If no value is returned, it means that HAProxy served the response from the cache.

frontend http_front

http-response set-header X-Proxy-Cache-Status HIT if !{ srv_id -m found }

http-response set-header X-Proxy-Cache-Status MISS if { srv_id -m found }

Stage 3: Redis

We use Redis in a Cluster deployment to cache application data that is accessed from multiple places, and to cache partial responses that are not eligible for full response caching via HAProxy.
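Application-level caching of this kind usually follows a "return the cached value, otherwise compute and store it" pattern, similar in spirit to the remember helper in Laravel's cache API. The sketch below shows the pattern in Python with an in-memory dictionary standing in for Redis; the class name `TtlCache` and its interface are assumptions for illustration, not Streamfinity's code.

```python
import time


class TtlCache:
    """In-memory stand-in for a Redis client (assumption, for illustration)."""

    def __init__(self, clock=time.monotonic):
        self._items = {}     # key -> (expires_at, value)
        self._clock = clock  # injectable so expiry can be tested without sleeping

    def remember(self, key, ttl_seconds, compute):
        """Return the cached value for key, computing and storing it on a miss."""
        entry = self._items.get(key)
        now = self._clock()
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the expensive call
        value = compute()
        self._items[key] = (now + ttl_seconds, value)
        return value
```

With a real Redis cluster, the dictionary lookups would become network calls with an expiry set on the key, but the control flow stays the same.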